My first pot of gold: GET!!!

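I had been advertising myself on Xianyu (闲鱼) for almost a month when I finally landed a fairly big job: writing a scraper for Toutiao (今日头条). I quoted 1000 and he haggled me straight down to 650. Looking back, I still feel I sold myself a bit short. Even so, it beats my first job, scraping Weibo data, by a long way (I was so naive back then that I asked for just 80?!). This was my first real contact with the outside world and with doing business, so taking a few losses is normal. At least I now have a feel for the going rates. Next time I won't quote so conservatively or negotiate like a student. I need to learn to hold on to my bargaining chips and stick to my price floor. Thinking about it now, he was the one who needed the software from me; I wasn't the one desperate for money. Then again, earning a bit of money in two days on my own skills does make me feel pretty proud.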

Now, on to the task itself.

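This was the first time I learned that Toutiao even has a web version, complete with a Weibo knock-off section called Weitoutiao (微头条). That section was the target of this data collection job, and its close resemblance to Weibo is exactly why I took the job.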

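As for the JSON served by the mobile (m.) site, I think Toutiao, as the newcomer, structures it more neatly and clearly (read: it is easier to scrape). Notably, every page's JSON records the offset at which the next page starts, and that offset is simply a timestamp (in milliseconds, as the code below treats it), which makes searching by date possible. Weibo, by contrast, uses a page parameter and keeps the pagination logic on the back end, strictly capping how many pages it will return, which makes scraping complete data very difficult. (Weibo is probably fed up with scrapers; its anti-scraping measures keep getting stricter.)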
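To make the offset idea concrete, here is a minimal sketch of date-bounded paging with a "next offset" cursor. It assumes, as the code below does, that each page is a JSON object with a `data` list and an `offset` field holding the millisecond timestamp at which the next (older) page starts. `crawl_by_offset` and `fetch_page` are hypothetical names; the fetch function is passed in so the paging logic can be tried without touching the network.

```python
from typing import Callable, Dict, Iterator


def crawl_by_offset(fetch_page: Callable[[int], Dict], start_ms: int,
                    end_ms: int = 0) -> Iterator:
    """Yield feed items from newest to oldest, between end_ms and start_ms.

    Offsets are millisecond timestamps; end_ms == 0 asks for the newest page.
    Each page is assumed to look like {"data": [...], "offset": next_offset}.
    """
    offset = end_ms
    while True:
        page = fetch_page(offset)
        yield from page["data"]
        next_offset = page["offset"]
        # Stop if the cursor did not move back in time (end of the feed)...
        if offset != 0 and next_offset >= offset:
            return
        # ...or if the next page would start at or before the requested start.
        if next_offset <= start_ms:
            return
        offset = next_offset
```

For example, with a fake feed `pages = {0: {"data": ["a", "b"], "offset": 3000}, 3000: {"data": ["c"], "offset": 2000}, ...}`, calling `list(crawl_by_offset(lambda off: pages[off], start_ms=2500))` stops after the second page, because its cursor (2000) already lies before the requested start. Like the original, this stops at page granularity, so the last page may still contain a few posts slightly older than the start time.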

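Since I brought up anti-scraping: Toutiao's only check is whether the Referer header points to its own site. It doesn't check cookies or login state, and at one request every 3 seconds the scraper ran stably for long stretches. That deserves some praise (from a data collector's point of view, of course).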
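A minimal sketch of the two things that kept the scraper running: a same-site Referer and a fixed gap between requests. To stay self-contained it uses the standard library's `urllib.request` instead of `requests`, and it only builds the request without sending it. The feed and profile URLs are copied from the code below (they may well have changed since); `build_feed_request`, `Throttle`, and the shortened User-Agent are illustrative assumptions.

```python
import time
import urllib.parse
import urllib.request

# URLs copied from the scraper below; they may have changed since.
FEED_URL = "https://profile.zjurl.cn/api/feed/profile/v1/"
PROFILE_URL = "https://profile.zjurl.cn/rogue/ugc/profile/?user_id=%s"


def build_feed_request(uid: str, offset: int) -> urllib.request.Request:
    """Build (without sending) one feed request.

    The Referer points at the user's own profile page on the same site,
    which was the only check observed; no cookies or login are sent.
    """
    query = urllib.parse.urlencode(
        {"category": "profile_all", "visited_uid": uid, "offset": offset})
    headers = {
        "Referer": PROFILE_URL % uid,
        # Placeholder mobile UA (assumption); the real code uses a longer one.
        "User-Agent": "Mozilla/5.0 (Linux; Android 8.1.0) Mobile Safari/537.36",
    }
    return urllib.request.Request(FEED_URL + "?" + query, headers=headers)


class Throttle:
    """Ensure at least `interval` seconds pass between consecutive wait() calls."""

    def __init__(self, interval=3.0, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self.last = None  # time of the previous wait(); None before the first

    def wait(self):
        if self.last is not None:
            remaining = self.interval - (self.clock() - self.last)
            if remaining > 0:
                self.sleep(remaining)
        self.last = self.clock()
```

In a loop you would call `throttle.wait()` before each `urllib.request.urlopen(build_feed_request(uid, offset), timeout=5)`. Measuring from the previous call instead of always sleeping 3 seconds means a slow response is not followed by extra waiting, and injecting `clock` and `sleep` lets the throttle be tested with a fake clock.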

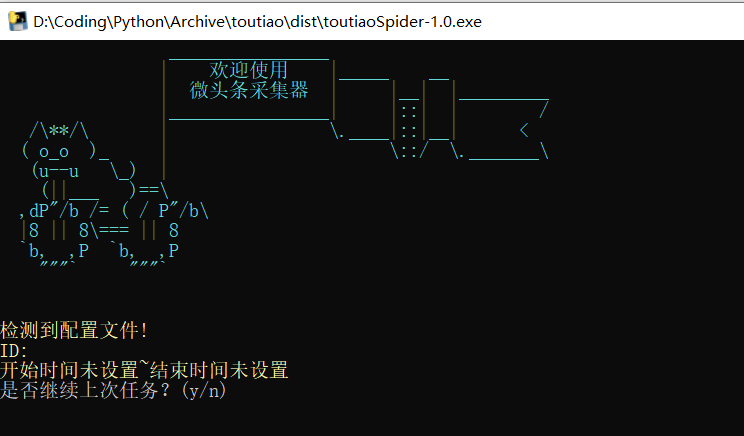

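This was also the first time I built something that really counts as a product, and I have to say PyInstaller's one-command packaging is great. A GUI takes a lot of time and effort and I couldn't be bothered, so I ended up with this rather raw (ugly) command-line interface.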

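To stop him from taking the software and disappearing without paying, I thoughtfully added a time limit. Think you can just change the system clock? I also made the program automatically download its configuration from my server, and he has to be online to scrape anyway. So although it's called a "trial version", it actually does a little more than the "full version"!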
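The time-limit code isn't included in the listing below. One way to make an expiry check survive a changed system clock is to take the current time from a server the program has to contact anyway, for example from the standard HTTP `Date` response header. A minimal sketch, assuming a hypothetical expiry date `TRIAL_EXPIRES`; `trial_expired` is an illustrative name, not the mechanism used in the actual program.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Hypothetical expiry date for the trial build.
TRIAL_EXPIRES = datetime(2019, 1, 1, tzinfo=timezone.utc)


def trial_expired(server_date_header: str, expires: datetime = TRIAL_EXPIRES) -> bool:
    """Decide expiry from a server's HTTP Date header instead of the local
    clock, so changing the system time does not extend the trial."""
    server_now = parsedate_to_datetime(server_date_header)  # aware (GMT) datetime
    return server_now >= expires
```

For instance, `trial_expired(resp.headers["Date"])` after any `urllib.request.urlopen` call. HTTP dates are given in GMT, so `parsedate_to_datetime` returns a timezone-aware value that compares cleanly with the aware `TRIAL_EXPIRES`.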

The main code is below.


```python
import json
import os
import re
import time

import requests
from colorama import Fore, Back, Style, init

# version 1.2 #


def toutiaoSpider(uid, stimestamp, etimestamp):
    """Crawl a user's Weitoutiao posts backwards from etimestamp to stimestamp.

    Timestamps are in seconds; 0 means "not set". The feed's offset cursor is
    a millisecond timestamp, hence the * 1000 and / 1000 conversions.
    """
    conf_dic = {'uid': uid, 'stimestamp': stimestamp, 'etimestamp': etimestamp}
    page_count = 0
    item_count = 0
    offset = int(round(etimestamp * 1000))
    while True:
        response = getFeed(uid, offset)
        if response is None:
            # All retries failed; config.json still holds the last offset,
            # so the task can be resumed on the next run
            print(Fore.RED + 'Requests keep failing; run the program again to resume.')
            os.system("pause")
            return
        page_count += 1
        content_jsons = json.loads(response.content.decode("utf-8"))
        print(Fore.BLUE + '[%d] %s' % (page_count, response.url))
        date_str = time.strftime(
            "%Y-%m-%d %H:%M", time.localtime(offset / 1000))
        print(Fore.LIGHTCYAN_EX + '[Current page time] ' + date_str)
        for item in content_jsons['data']:
            item_json = json.loads(item['content'])
            # Parse the main content
            text = item_json.get('content')  # post text
            if text is None:
                continue
            create_time = item_json['create_time']  # Unix timestamp (seconds)
            # Format as local time, e.g. 2016-05-05 20-28-54
            # (dashes instead of colons so it is a valid Windows folder name)
            date_str = time.strftime("%Y-%m-%d %H-%M-%S", time.localtime(create_time))
            thread_id_str = item_json['thread_id_str']
            username = item_json['user']['screen_name']
            # One folder per post; an existing folder means it was already saved
            path = './output/%s-%s/%s_%s/' % (uid,
                                              username, date_str, thread_id_str[-3:])
            if os.path.exists(path):
                continue
            try:
                os.makedirs(path)
            except OSError:
                # The username may contain characters that are not allowed in paths
                path = './output/%s/%s_%s/' % (uid,
                                               date_str, thread_id_str[-3:])
                if os.path.exists(path):
                    continue
                os.makedirs(path)
            # The text file is named after the read count (万 = 10,000)
            read_count = int(item_json.get('read_count', 0))
            if read_count >= 10000:
                rd_str = '%.1f万' % (read_count / 10000)
            else:
                rd_str = str(read_count)
            with open(path + rd_str + '.txt', 'w', encoding='utf-8') as f:
                f.write(text)
            # Images
            img_tags = item_json.get('large_image_list')
            if img_tags is not None:
                for img_tag in img_tags:
                    getImg(img_tag.get('url'), path)
            item_count += 1
        print(Fore.BLUE + Back.LIGHTYELLOW_EX + 'Page %d done, %d posts collected so far' %
              (page_count, item_count))
        # Check whether the task is complete
        last_off = offset
        offset = content_jsons['offset']
        if last_off != 0:
            # Stop when the cursor no longer moves back in time
            # or has passed the requested start time
            if last_off <= offset or offset <= int(round(stimestamp * 1000)):
                print(Back.YELLOW + Fore.LIGHTMAGENTA_EX + "\n[Task complete!]")
                os.remove('./config.json')
                os.system("pause")  # Windows-only: keep the console window open
                return
        # Save progress so an interrupted run can be resumed
        with open('./config.json', 'w') as f:
            conf_dic['etimestamp'] = offset / 1000
            json.dump(conf_dic, f)
        time.sleep(3)  # one request every 3 seconds


def getFeed(uid, offset):
    """Fetch one page of the user's feed; retry up to 5 times, else return None."""
    requests.packages.urllib3.disable_warnings()  # hide warnings caused by verify=False
    base_url = 'https://profile.zjurl.cn/api/feed/profile/v1/'
    param = {'category': 'profile_all',
             'visited_uid': uid,
             'offset': offset}
    header = {
        # The only anti-scraping check: the referer must be Toutiao's own site
        'referer': 'https://profile.zjurl.cn/rogue/ugc/profile/?user_id=%s' % uid,
        'User-Agent': 'Mozilla/5.0 (Linux; U; Android 8.1.0; zh-CN; BLA-AL00 Build/HUAWEIBLA-AL00) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.108 UCBrowser/11.9.4.974 UWS/2.13.1.48 Mobile Safari/537.36 AliApp(DingTalk/4.5.11) com.alibaba.android.rimet/10487439 Channel/227200 language/zh-CN'
    }
    for _ in range(5):
        try:
            response = requests.get(
                base_url, params=param, headers=header, verify=False, timeout=5)
        except requests.exceptions.RequestException:
            print('[RETRY]')
            time.sleep(5)
            continue
        if response.content == b'':
            print('[EMPTY RETRY]')
            time.sleep(5)
            continue
        return response
    return None


# Download an image
def getImg(url, path):
    """Save one image into <path>/img/; retry up to 5 times."""
    if not url:
        return
    requests.packages.urllib3.disable_warnings()
    header = {
        'referer': 'https://profile.zjurl.cn/',
        'User-Agent': 'Mozilla/5.0 (Linux; U; Android 8.1.0; zh-CN; BLA-AL00 Build/HUAWEIBLA-AL00) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.108 UCBrowser/11.9.4.974 UWS/2.13.1.48 Mobile Safari/537.36 AliApp(DingTalk/4.5.11) com.alibaba.android.rimet/10487439 Channel/227200 language/zh-CN'
    }
    for _ in range(5):
        try:
            response = requests.get(
                url, headers=header, verify=False, timeout=5)
        except requests.exceptions.RequestException:
            print('[RETRY]')
            time.sleep(5)
            continue
        if response.content == b'':
            print('[EMPTY RETRY]')
            time.sleep(5)
            continue
        # Downloaded: name the file after the URL segment just before "?from"
        match = re.search(r'(?<=/)([^/]+?)(?=\?from)', response.url)
        if match is None:
            print(Fore.RED + '[IMG SKIPPED] ' + Style.RESET_ALL + response.url)
            return
        img_id = match.group(1).replace('.image', '.gif')
        print(Fore.GREEN + '[IMG] ' + Style.RESET_ALL + response.url)
        img_path = os.path.join(path, 'img')
        os.makedirs(img_path, exist_ok=True)
        with open(os.path.join(img_path, img_id), 'wb') as f:
            f.write(response.content)
        return


def main_process():
    # The banner reads "欢迎使用 微头条采集器" ("Welcome to the Weitoutiao scraper")
    print(Fore.CYAN + Style.BRIGHT +
          " ________________ \n" +
          " | 欢迎使用 |_____ __ \n" +
          " | 微头条采集器 | |__| |_________ \n" +
          " |________________| |::| | / \n" +
          " /\\**/\\ | \\.____|::|__| < \n" +
          " ( o_o )_ | \\::/ \\._______\\ \n" +
          " (u--u \\_) | \n" +
          " (||___ )==\\ \n" +
          ' ,dP"/b /= ( / P"/b\\ \n' +
          " |8 || 8\\=== || 8 \n" +
          " `b, ,P `b, ,P \n" +
          ' """` """` \n' +
          ' \n')
    continue_flag = ''
    # Offer to resume an unfinished task saved in config.json
    if os.path.exists('./config.json'):
        try:
            with open("./config.json", 'r') as load_f:
                load_conf = json.load(load_f)
            uid = load_conf['uid']
            stimestamp = load_conf['stimestamp']
            etimestamp = load_conf['etimestamp']
            sdate_str = time.strftime(
                "%Y-%m-%d %H:%M", time.localtime(stimestamp))
            edate_str = time.strftime(
                "%Y-%m-%d %H:%M", time.localtime(etimestamp))
            if stimestamp == 0:
                sdate_str = 'start time not set'
            if etimestamp == 0:
                edate_str = 'end time not set'
            print(Fore.YELLOW + Style.BRIGHT + 'Found a config file!')
            print(Fore.YELLOW + Style.BRIGHT + 'ID: %s\n%s ~ %s' %
                  (uid, sdate_str, edate_str))
            continue_flag = input('Resume the previous task? (y/n) ')
        except Exception:
            pass  # unreadable config: fall through and start a new task
    if continue_flag == 'y':
        toutiaoSpider(uid, stimestamp, etimestamp)
        return
    while True:
        uid = input('Enter the ID of the account to scrape, e.g. 6767668116: ')
        print(Fore.LIGHTMAGENTA_EX + 'You entered: ' + uid)
        stimestamp = 0
        etimestamp = 0
        sdate_str = ''
        edate_str = ''
        # Start time (empty input = no lower bound)
        while True:
            try:
                stime_str = input('Enter the start time (yyyy mm dd HH MM): ')
                if stime_str != '':
                    t = time.strptime(stime_str, '%Y %m %d %H %M')
                    stimestamp = int(time.mktime(t))
                    sdate_str = time.strftime(
                        "%Y-%m-%d %H:%M", time.localtime(stimestamp))
                    print(Fore.LIGHTMAGENTA_EX + 'You entered: ' + sdate_str)
                else:
                    stimestamp = 0
                    sdate_str = 'start time not set'
                    print(Fore.LIGHTMAGENTA_EX + sdate_str)
                break
            except (ValueError, OverflowError):
                print(Fore.RED + 'Invalid format, please try again')
        # End time (empty input = start from the newest post)
        while True:
            try:
                etime_str = input('Enter the end time (yyyy mm dd HH MM): ')
                if etime_str != '':
                    t = time.strptime(etime_str, '%Y %m %d %H %M')
                    etimestamp = int(time.mktime(t))
                    edate_str = time.strftime(
                        "%Y-%m-%d %H:%M", time.localtime(etimestamp))
                    print(Fore.LIGHTMAGENTA_EX + 'You entered: ' + edate_str)
                else:
                    etimestamp = 0
                    edate_str = 'end time not set'
                    print(Fore.LIGHTMAGENTA_EX + edate_str)
                break
            except (ValueError, OverflowError):
                print(Fore.RED + 'Invalid format, please try again')
        print(Fore.LIGHTMAGENTA_EX + '\nYour input:\n ID: %s\n %s ~ %s' %
              (uid, sdate_str, edate_str))
        verify = input('Confirm? (y/n) ')
        if verify != 'n':
            break
    # ######### End of input ###########
    # Create the config file (also used to resume an interrupted run)
    conf_dic = {'uid': uid, 'stimestamp': stimestamp, 'etimestamp': etimestamp}
    with open('./config.json', 'w') as f:
        json.dump(conf_dic, f)
    toutiaoSpider(uid, stimestamp, etimestamp)


if __name__ == "__main__":
    init(autoreset=True)  # colorama: enable ANSI colours on Windows consoles
    main_process()
```
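The resume feature above rewrites `config.json` after every page with a plain `open(..., 'w')`. If the program is killed in the middle of that write, the file can be left truncated; on the next start the `json.load` error is swallowed, so the user silently starts a new task instead of resuming. A minimal sketch of a safer checkpoint, assuming the same JSON layout (`uid`, `stimestamp`, `etimestamp`); `save_checkpoint` and `load_checkpoint` are hypothetical helpers that write to a temporary file in the same directory and then swap it in with `os.replace`.

```python
import json
import os
import tempfile


def save_checkpoint(conf: dict, path: str = "./config.json") -> None:
    """Write the resume state without ever leaving a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    # Temp file in the same directory, so the final rename stays on one volume
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(conf, f)
        # Overwrites the old checkpoint in a single rename (atomic on POSIX)
        os.replace(tmp_path, path)
    except BaseException:
        os.remove(tmp_path)  # clean up the temp file, then re-raise
        raise


def load_checkpoint(path: str = "./config.json"):
    """Return the saved state, or None if it is missing or unreadable."""
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except (OSError, ValueError):  # json.JSONDecodeError is a ValueError
        return None
```

In the scraper, `save_checkpoint(conf_dic)` would take the place of the `with open('./config.json', 'w')` block, and `load_checkpoint()` returning `None` would mean "no task to resume".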
